One of the most awaited moments at this year’s Collision was the appearance of Geoffrey Hinton, a cognitive psychologist and computer scientist who, thanks to his research on neural networks, is often referred to as the “Godfather of AI”. Two months ago, he stepped down from his position at Google after 10 years so that he could speak freely about the risks of artificial intelligence. At the conference, in a Q&A with Nick Thompson, CEO of The Atlantic magazine, Hinton expanded on his concerns and took a deep look at AI’s potential impact on society. Here are 6 things we learned from Hinton about the potential risks of artificial intelligence:

1 – Language models are approaching human-level reasoning

“I don’t understand why they can do it, but they can do little bits of reasoning,” Geoffrey Hinton said. He described asking GPT-4 to solve a fairly complex puzzle, and it did. “That’s thinking.” So, is there any cognitive process that machines will not be able to replicate? According to the professor, “we’re just a big neural net, and there’s no reason why an artificial neural net shouldn’t be able to do everything we can do.”

2 – The future may be controlled by AI

We are entering a period of huge uncertainty in which nobody really knows what’s going to happen. That is precisely why we have to take the following possibility seriously: “if they get smarter than us, which seems quite likely, and they have goals of their own, which also seems quite likely, they may well develop the goal of taking control. And if they do that, we are in trouble.”

3 – AI may enhance social inequality

When we talk about LLMs, we must consider the big increase in productivity they will generate. According to Hinton, “in our society, if we get a big increase in productivity like that, the wealth is not going to the people who are doing the work. It’s going to make the rich richer and the poor poorer.” Dealing with a new situation like superintelligence will likely cause unprecedented changes. As for those who believe new jobs will emerge to replace the old ones, he says, “I’m not sure how they can confidently predict that more jobs will be created than lost”.

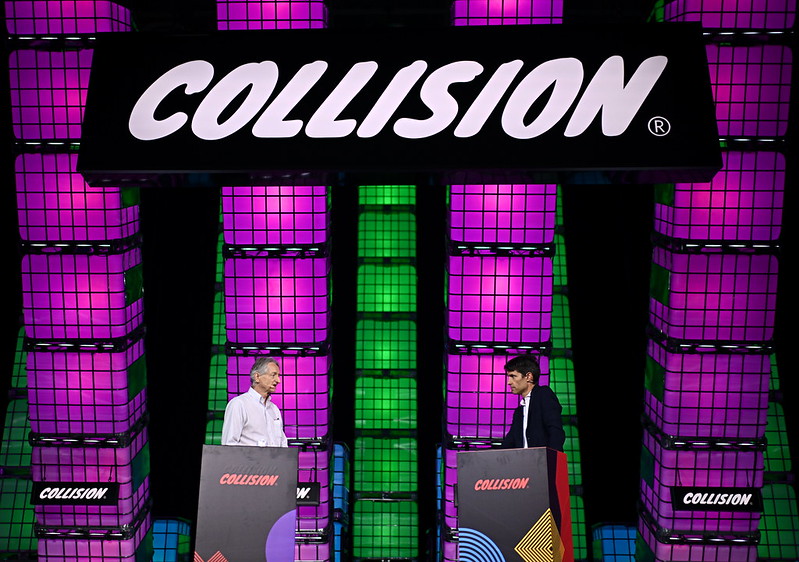

Geoffrey Hinton and Nick Thompson at Collision 2023. Photo by Ramsey Cardy/Collision via Sportsfile

4 – Plumbing is the type of career people should be looking at

If you’re considering a new career, choose carefully. We don’t know which jobs will become extinct because of generative AI, but Hinton has an idea. “The jobs that are going to survive AI for a long time are jobs where you have to be very adaptable and physically skilled. Plumbing is that kind of job. Manual dexterity is hard for machines.”

5 – The most impactful improvements in the next 5 years will be in multimodal large models

In the near future, we are going to be looking at more than just language models. That is because language, as rich as it may be, is a limited vector of information, and it will soon be combined with other vectors. “It’s amazing what you can learn from language alone, but you are much better off learning from many modalities. Small children just don’t learn from language alone,” he stated.

6 – It’s not just science fiction

Consider this: if AI becomes smarter than humans and develops manipulation skills, are you confident people will stay in charge? “I think it’s important that people understand it’s not just science fiction, it’s not just fear-mongering. It is a real risk that we need to think about, and we need to figure out in advance how to deal with it.” Hinton said that before AI becomes superintelligent, the people developing it should be encouraged to put a lot of work into understanding how it might go wrong. The problem? “Right now, there are 99 very smart people trying to make it better and one very smart person trying to figure out how to stop it from taking over. And maybe you want to be more balanced.”

On a brighter note, Geoffrey Hinton does see the positive side of AI, and he affirmed that progress is inevitable. But does the good outweigh the bad? “We seriously ought to worry about mitigating all the bad side effects of it and worry about the existential threat.”